02 Oct 23

Data Generation for Deep Fakes: The Art of AI Manipulation in Media

Deep fakes, a phenomenon that has emerged through the use of artificial intelligence in media, are both fascinating and concerning. This intriguing but unsettling development in digital content creation stems from AI’s power to transform visual content and the media’s ability to influence attitudes. The role of AI in media production has never been more prominent.

The Connection Between Data and Artificial Intelligence

Data is at the heart of every ground-breaking piece of AI technology. AI models improve by analysing large datasets, learning complex patterns, and producing outputs that can resemble human intelligence.

In this process, AI models are trained on varied datasets that capture speech, body motion, and even facial expressions. This variety is crucial for replicating human expressions, gestures, and behaviour in AI-driven media production.

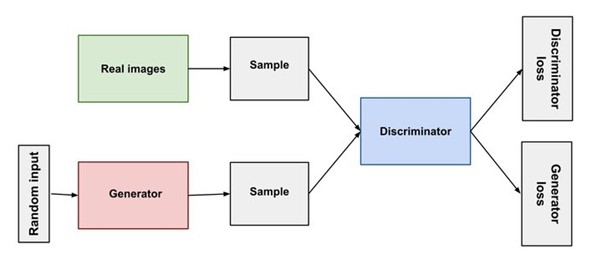

Many deep fakes are built on Generative Adversarial Networks (GANs). These networks have two components: a generator and a discriminator.

The generator produces synthetic data, and the discriminator tries to distinguish real data from generated data. Using the discriminator’s feedback, the generator refines its output over many iterations, producing content that gradually blurs the line between authenticity and deception. This process showcases the power of AI in media.
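The adversarial game described above has a compact mathematical form. In the original GAN formulation, the discriminator $D$ and generator $G$ play a minimax game over the value function below, where $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a random noise vector $z$, and $p_{\text{data}}$ and $p_z$ are the real-data and noise distributions. This is the textbook objective; specific deep-fake tools may use variants of it.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes $V$ up by scoring real samples near 1 and generated ones near 0, while the generator pushes it down by making $D(G(z))$ approach 1. In practice the generator is often trained to maximise $\log D(G(z))$ instead, because that gives stronger gradients early in training, when the discriminator easily rejects its samples.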

At their core, deep fakes fuse advanced AI algorithms with multimedia elements, a prime example of AI in media at work. These carefully crafted audio and visual alterations can display an astonishing degree of realism, eliciting emotions ranging from awe to alarm. They can seamlessly transpose one person’s face onto another’s body or modify vocal attributes with startling accuracy, resulting in hyper-realistic videos and images.

Creating convincing deep fakes demands a substantial volume of data: an extensive dataset covering a wide range of the subject’s characteristics, including various angles, facial expressions, lighting conditions, and environmental contexts. For voice alteration, an extensive library of audio samples is also crucial for accurately mimicking speech nuances and vocal traits. This data-hungry process showcases the capabilities of AI in media for data generation and manipulation.
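As a rough illustration of what "coverage" means in practice, the sketch below counts how many samples exist for each combination of pose, expression and lighting, and lists the combinations that are missing. The attribute names and values are hypothetical placeholders; a real pipeline would derive them from its own annotations or from a face-analysis model.

```python
from collections import Counter
from itertools import product

# Hypothetical attribute values; a real project would define its own taxonomy.
POSES = ("frontal", "left_profile", "right_profile")
EXPRESSIONS = ("neutral", "smiling", "talking")
LIGHTING = ("daylight", "indoor", "low_light")


def coverage_gaps(samples, min_count=1):
    """Return (pose, expression, lighting) combinations that have fewer than
    `min_count` samples. Each sample is a dict with the hypothetical keys
    'pose', 'expression' and 'lighting'."""
    counts = Counter((s["pose"], s["expression"], s["lighting"]) for s in samples)
    return [
        combo
        for combo in product(POSES, EXPRESSIONS, LIGHTING)
        if counts[combo] < min_count
    ]


if __name__ == "__main__":
    samples = [
        {"pose": "frontal", "expression": "neutral", "lighting": "daylight"},
        {"pose": "frontal", "expression": "smiling", "lighting": "indoor"},
    ]
    gaps = coverage_gaps(samples)
    total = len(POSES) * len(EXPRESSIONS) * len(LIGHTING)
    print(f"{len(gaps)} of {total} combinations are missing")
```

Listing gaps this way tells a team which angles or lighting conditions still need to be collected before training begins.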

The Journey from Data to Deep Fakes

· Data Collection and Preparation

The first step is to build a large dataset tailored to the target individual, compiled from images and videos drawn from a variety of sources. AI experts then preprocess the data meticulously, for example by detecting and aligning facial landmarks and extracting important features, to prepare it for later phases of model training.
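Landmark alignment commonly means estimating a transform that maps each face's detected landmarks onto a fixed template, so that every training crop has the eyes, nose and mouth in roughly the same place. The sketch below estimates a least-squares similarity transform (scale, rotation, translation) with NumPy using Umeyama's method. It assumes landmarks have already been detected by some face-landmark model, and the five-point `TEMPLATE` coordinates are illustrative placeholders, not values from any particular library.

```python
import numpy as np

# Illustrative 5-point template (eye centres, nose tip, mouth corners) in a
# 112x112 crop. These coordinates are hypothetical placeholders.
TEMPLATE = np.array(
    [[38.0, 52.0], [74.0, 52.0], [56.0, 72.0], [42.0, 92.0], [70.0, 92.0]]
)


def estimate_similarity(src, dst):
    """Least-squares similarity transform (scale, R, t) mapping src points
    onto dst points, following Umeyama (1991)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between the centred point sets.
    cov = dst_c.T @ src_c / len(src)
    U, S, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    d = np.ones(src.shape[1])
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        d[-1] = -1.0
    R = U @ np.diag(d) @ Vt

    # Scale = trace(diag(S) @ diag(d)) / mean squared distance of src from its centroid.
    scale = (S * d).sum() / (src_c ** 2).sum(axis=1).mean()
    t = mu_dst - scale * R @ mu_src
    return scale, R, t


def align_landmarks(landmarks, template=TEMPLATE):
    """Map detected landmarks onto the template; return the aligned points
    and the transform so it can also be applied to the image."""
    scale, R, t = estimate_similarity(landmarks, template)
    aligned = scale * np.asarray(landmarks, dtype=float) @ R.T + t
    return aligned, (scale, R, t)


if __name__ == "__main__":
    # Simulate "detected" landmarks: the template scaled, rotated and shifted.
    theta = np.deg2rad(20)
    rot = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
    detected = 1.7 * TEMPLATE @ rot.T + np.array([15.0, -8.0])
    aligned, _ = align_landmarks(detected)
    print(np.allclose(aligned, TEMPLATE))  # True
```

In a real pipeline the same transform would also be applied to the image pixels (for example with an affine warp from an image library) before cropping.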

· Training the Generative Models

Generative Adversarial Networks (GANs) provide a solid foundation for generating deep fakes. The generator’s purpose is to create data that tests the discriminator’s capacity to distinguish it from real data, resulting in an ongoing tug-of-war. Through this push-and-pull dynamic, the generator’s ability to produce convincing data tends to improve with each iteration.
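To make the alternating updates concrete, here is a deliberately tiny GAN written with NumPy and hand-derived gradients. Everything about it is an illustrative assumption: one-dimensional "data" drawn from a Gaussian, a generator that can only shift its noise by a hypothetical offset `b`, and a logistic-regression discriminator with parameters `w` and `c`. GAN-based deep-fake models typically use deep convolutional networks and an autodiff framework, but the loop has the same shape: a discriminator step, then a generator step.

```python
import numpy as np


def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))


def train_toy_gan(steps=3000, lr=0.05, batch=256, real_mean=4.0, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data.

    Assumed toy setup (not a real deep-fake model):
      real data      x ~ N(real_mean, 1)
      generator      G(z) = z + b,  z ~ N(0, 1)   (one parameter: b)
      discriminator  D(x) = sigmoid(w * x + c)    (parameters: w, c)
    Gradients are derived by hand, so no autodiff library is needed.
    """
    rng = np.random.default_rng(seed)
    b, w, c = 0.0, 0.0, 0.0
    history = []
    for _ in range(steps):
        # Discriminator step: minimise -[log D(x) + log(1 - D(G(z)))].
        x = rng.normal(real_mean, 1.0, batch)
        g = rng.normal(0.0, 1.0, batch) + b
        d_real, d_fake = sigmoid(w * x + c), sigmoid(w * g + c)
        grad_w = np.mean((d_real - 1.0) * x) + np.mean(d_fake * g)
        grad_c = np.mean(d_real - 1.0) + np.mean(d_fake)
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimise -log D(G(z)) (the "non-saturating" loss),
        # i.e. move b so the updated discriminator rates fakes as more real.
        g = rng.normal(0.0, 1.0, batch) + b
        grad_b = np.mean((sigmoid(w * g + c) - 1.0) * w)
        b -= lr * grad_b
        history.append(b)
    return b, w, c, history


if __name__ == "__main__":
    b, w, c, history = train_toy_gan()
    print(f"generator offset b after training: {b:.3f} (real mean 4.0)")
```

Because the real distribution here only needs shifting, the generator's offset `b` should drift toward `real_mean`. Richer models do not enjoy such simple dynamics, and real GAN training can oscillate or collapse, which is one reason careful tuning matters.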

· Fine-tuning for Hyper-Realism

To reach a high level of authenticity, AI developers then tune the model’s parameters and hyperparameters, optimising its performance against set benchmarks. This process refines the model’s ability to create realistic material, further expanding what can be achieved with AI in media.

Figure 2: Generative Model Training (Google)
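The hyperparameter tuning described in the fine-tuning step can be as simple as an exhaustive grid search over candidate values. The sketch below is generic: `evaluate` stands in for whatever (hypothetical) training-and-benchmarking routine a team uses, and `toy_benchmark` is an invented scoring function included only so the example runs.

```python
from itertools import product


def grid_search(evaluate, grid):
    """Try every combination in `grid` (a dict of name -> candidate values)
    and return the best-scoring configuration and its score.

    `evaluate` is a caller-supplied (hypothetical) function that trains or
    fine-tunes a model with the given hyperparameters and returns a
    benchmark score where higher is better.
    """
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


if __name__ == "__main__":
    # Invented stand-in benchmark for illustration only.
    def toy_benchmark(learning_rate, batch_size):
        return -abs(learning_rate - 2e-4) * 1e4 - abs(batch_size - 32) / 32

    grid = {"learning_rate": [1e-4, 2e-4, 5e-4], "batch_size": [16, 32, 64]}
    print(grid_search(toy_benchmark, grid))
```

Grid search is easy to reason about, but the number of runs grows multiplicatively with every added hyperparameter; when each evaluation means retraining a large generative model, random search or Bayesian optimisation is often preferred.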

Navigating the Moral Dilemmas

Alongside the technological marvels of deep fakes comes a challenging moral dilemma. Content manipulated through deep fakes, one of the more nuanced applications of AI in media, has the power to destroy trust, alter perceptions, and cause tension. The grey area between authenticity and deception has dangerous implications for the reliability of information and even for the stability of society. Addressing the various issues presented by deep fakes requires a comprehensive approach.

· Robust Regulatory Frameworks

Governments and technology companies must work together to create robust regulatory frameworks that prevent the abuse of deep fakes. By building systems to recognise and label modified content, they can use AI in media not just as a tool for creation but also for the critical task of discernment, helping users distinguish between authentic and manipulated media.
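One building block for marking content is a cryptographic tag that a publisher attaches at creation time, so that any later alteration can be detected. The sketch below uses an HMAC from Python's standard library as a simplified stand-in; it is an assumption for illustration, not how any specific regulator or platform works. Real provenance schemes, such as the C2PA standard, instead use public-key signatures embedded in a manifest, so anyone can verify content without sharing a secret key.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> str:
    """Return a hex tag binding `content` to the holder of `key`."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, key: bytes, tag: str) -> bool:
    """True only if `content` is byte-for-byte what was signed with `key`."""
    expected = sign_content(content, key)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"publisher-secret-key"  # hypothetical shared secret
    original = b"raw bytes of a published video frame"
    tag = sign_content(original, key)

    tampered = original.replace(b"video", b"audio")
    print(verify_content(original, key, tag))  # True
    print(verify_content(tampered, key, tag))  # False
```

A tag like this only proves that tagged content has changed since signing; on its own it cannot tell whether an untagged video is a deep fake, which is why detection and education remain necessary.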

· Empowering through Education

Raising public awareness about deep fakes is essential: it equips people with the critical thinking skills needed to judge the authenticity of content and recognise possible red flags. These skills will become increasingly necessary as we enter an age in which visual content can be readily manipulated using AI.

The fascinating world of deep fakes highlights AI’s capacity for transformation while also emphasising the need for ethical progress. Striking a balance between ethical considerations and technological growth takes teamwork. Governments, AI researchers, ethicists, and society must work together to ensure that the innovations stemming from AI in media are harnessed for the greater good, enhancing societal benefits while curtailing risks.

AI’s Beneficent Impact

The impact of AI extends far beyond the field of deep fakes, driving change in a variety of industries.

For example, AI-powered diagnostics in healthcare improve patient outcomes by precisely analysing medical images. AI systems that detect fraudulent transactions help financial institutions strengthen their security. AI-powered insights enhance decision-making across industries, encouraging innovation and growth.

The exploration of AI manipulation underlines the vast potential of technology and invites us to reflect on its far-reaching implications. It encourages us to consider the future we want: one in which AI enhances human potential and directs us toward meaningful growth.

Our duty as data professionals grows in importance in this constantly changing world, where AI and data overlap to reinvent our capacities as humans. By encouraging a comprehensive strategy that balances innovation, ethics, and accountability, we can steer AI’s trajectory toward a future that transcends limitations and upholds the values that define us.

As we approach new frontiers, let’s not lose sight of the fact that it is up to all of us to steer the influence of AI manipulation for the benefit of society.

The future of AI in media is not just about technological advancements; it’s about steering those advancements in ways that benefit all of us, preserve truth, and protect individuals’ rights. It’s about recognising the power of what we can create and bearing the responsibility that comes with such power.

In this context, the role of data professionals extends beyond algorithms and datasets. It’s about shaping the ethical framework that will define this technology, which involves collaborating with legal experts, policymakers, educators, and civil society to establish standards that ensure responsible use. The conversation around deep fakes and data generation needs to move beyond how it’s done to, more importantly, why it’s done and what the implications of its use are.

Interested in joining our diverse team? Find out more about the Rockborne graduate programme here.
